Since I’ve been writing on the topic of workflows and tooling, I thought I’d give one of my favourite packages, Guild AI, a shout-out, and well-deserved exposure.

Guild AI is designed for managing machine learning experiments. It provides ways to track experiment runs, make runs reproducible, and compare runs with different hyperparameters, all in ways that are non-intrusive. Those who know me know I care a lot about my tools, and this is one of those tools that really sparks joy. It’s no surprise that the author of this package, Garrett Smith, comes from a systems engineering background. Garrett is also highly responsive on Slack and the community site my.guild.ai, actively listening to users and providing support.

The Competition

While there is no shortage of machine learning tooling, Guild AI takes a minimal and non-intrusive approach to instrumenting training scripts. Take Sacred, for example. It requires instrumenting the Python training script with decorators:

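    from sacred import Experiment
    from sklearn import datasets

    ex = Experiment('iris_rbf_svm')

    # Configuration values are declared inside a decorated function
    @ex.config
    def cfg():
        C = 1.0
        gamma = 0.7

    # The entry point is decorated so Sacred can inject the config values
    @ex.automain
    def run(C, gamma):
        iris = datasets.load_iris()
        # ...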

Sacred also handles scalar logging through its own custom module, and visualizing these scalars requires custom frontends like Omniboard.

Guild AI cleanly uses default Python functionality, allowing one to get started without any modification to training scripts. This also makes Guild a nice, non-committal choice. I use command-line arguments from argparse as configuration flags. Guild AI also has first-class support for TensorBoard, which is a popular and powerful choice.
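To make the argparse point concrete, here is a minimal sketch of the kind of training script I mean. The file name train.py, the flags --lr and --epochs, and the toy objective are all assumptions for illustration, not taken from a real project. Nothing in it imports or mentions Guild.

```python
import argparse


def build_parser():
    """Declare hyperparameters as ordinary command-line arguments."""
    parser = argparse.ArgumentParser(description="Toy training script")
    # Hypothetical flags, chosen only for this example
    parser.add_argument("--lr", type=float, default=0.1, help="learning rate")
    parser.add_argument("--epochs", type=int, default=5, help="number of epochs")
    return parser


def train(lr, epochs):
    """Minimise f(w) = w**2 by gradient descent; a stand-in for real training."""
    w = 1.0
    loss = w * w
    for _ in range(epochs):
        w -= lr * 2 * w  # the gradient of w**2 is 2w
        loss = w * w
        print(f"loss: {loss:.6f}")  # plain stdout logging, nothing tool-specific
    return loss


if __name__ == "__main__":
    args = build_parser().parse_args()
    train(args.lr, args.epochs)
```

Because the configuration lives in standard argparse arguments, the script runs exactly the same with plain python train.py --lr 0.05, and a run launched as something like guild run train.py lr=0.05 should need no changes to it, assuming Guild picks up the argparse arguments as flags.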

Experiment Tracking with Guild AI

Guild tracks each run of the training script, along with its process, when the script is launched through the intuitive guild CLI.

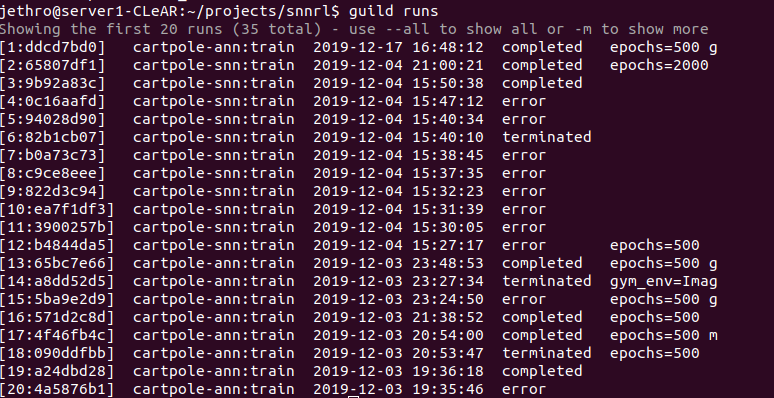

Figure 1: guild runs shows experiments I’ve run

Guild even ships with a beautiful web interface that allows you to view run files (model checkpoints, logs etc.), accessible via guild view.

Comparing Runs

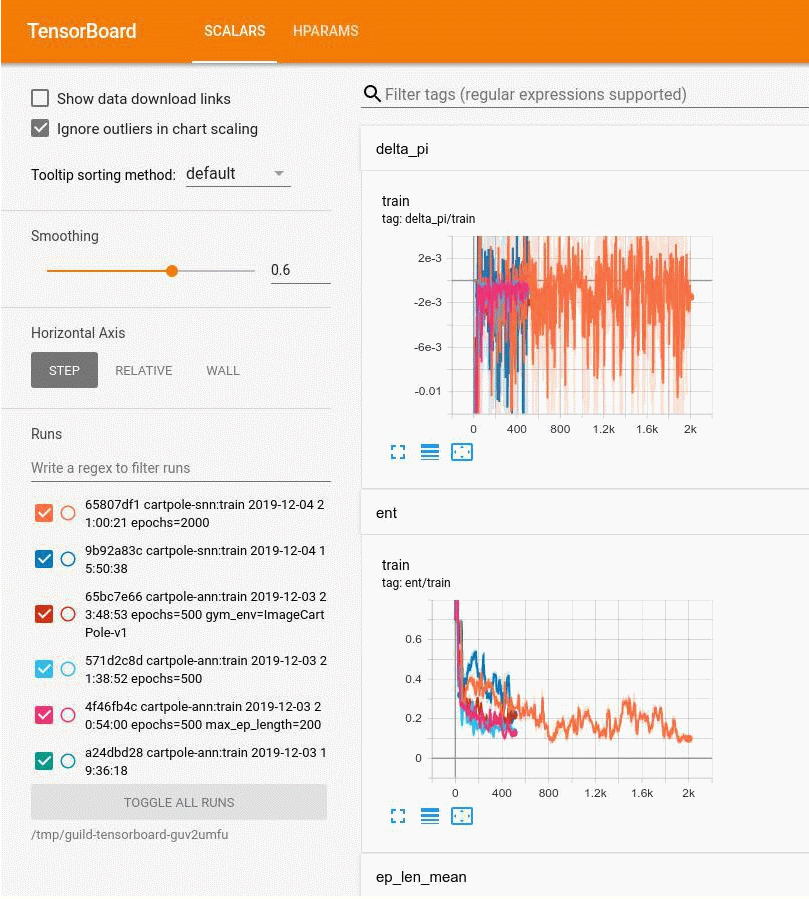

I use guild tensorboard -C to load TensorBoard for all my runs; the -C flag restricts this to completed runs.

    jethro@server1-CLeAR: ~/projects/snnrl$ guild tensorboard -C
    Preparing runs for TensorBoard
    TensorBoard 2.1.0 at http://server1-CLeAR:52675 (Press CTRL+C to quit)

Figure 2: TensorBoard scalars across multiple runs

It even supports the TensorBoard HParams view, which is designed for comparing runs with differing hyperparameters.

Guild AI supports a SQL-like filtering interface to allow the user to choose which runs to load and compare.

Hyperparameter Search

Guild AI supports multiple methods for hyperparameter search:

- Grid search
- Random search
- Bayesian optimization

These can be specified easily via the CLI. For example, passing a list of values for a flag runs one trial per value:

    guild run train.py x=[-0.5,-0.4,-0.3,-0.2,-0.1]
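For readers new to these search strategies, the following plain-Python sketch shows what grid search and random search over flag values amount to. It is an illustration under my own assumptions, not Guild's implementation: the helper names grid_trials and random_trials, and the flag name lr, are hypothetical.

```python
import itertools
import random


def grid_trials(flags):
    """Expand list-valued flags into one trial per combination (grid search)."""
    names = list(flags)
    # Treat a scalar flag as a single-element list so it stays fixed across trials
    value_lists = [v if isinstance(v, list) else [v] for v in flags.values()]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


def random_trials(ranges, n, seed=0):
    """Draw n trials with each flag sampled uniformly from its (low, high) range."""
    rng = random.Random(seed)  # seeded so the sampled trials are reproducible
    return [
        {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        for _ in range(n)
    ]


# The single-flag list from the command above yields five trials
single = grid_trials({"x": [-0.5, -0.4, -0.3, -0.2, -0.1]})

# Two list-valued flags yield the Cartesian product: 5 * 2 = 10 trials
combined = grid_trials({"x": [-0.5, -0.4, -0.3, -0.2, -0.1], "lr": [0.1, 0.01]})

# Random search samples a fixed number of points instead of enumerating a grid
sampled = random_trials({"x": (-0.5, -0.1)}, n=4)
```

The trade-off is the usual one: a grid grows multiplicatively with every extra flag, whereas random search keeps the number of trials fixed regardless of how many flags are searched.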

Hyperparameter search and reproducibility are especially important in Reinforcement Learning, and Guild has made both extremely simple.

Deployment

Guild AI also supports packaging, allowing for model collaboration, sharing and reuse. Since I’m using Guild AI within a research project, I haven’t needed these features.

Conclusion

I’m pleased with Guild AI as is, and the author is actively working on bringing improvements to the tool. I highly recommend that machine learning practitioners give it a whirl.